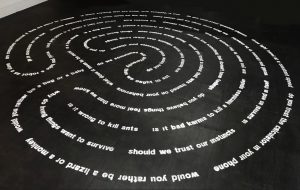

Moral Labyrinth

By Sarah Newman & Jessica Fjeld

Would you trust a robot trained on your behaviors? How will we know when a machine becomes sentient? What does it mean to be moral?

–excerpts from Moral Labyrinth

As machines get smarter, more complex, and able to operate autonomously in the world, we’ll need to program them with certain “values.” Yet we do not agree on what we value: across cultures, across individuals, even within ourselves. We often do not act in accordance with what we say we value, so should these systems learn from what we say or what we do? What are the implications of how our current belief systems manifest in the swiftly approaching technological future? As we anticipate such change, can we use this technological moment to become more honest, humble, and compassionate?

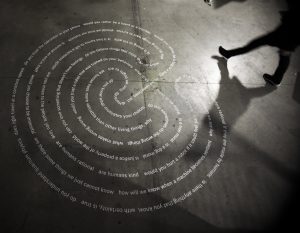

Moral Labyrinth is a 5×5 meter art installation that takes shape as a physical walking labyrinth composed of philosophical questions that deal, either directly or obliquely, with our complex relationships to technology, and more specifically with the machines that we build to serve us. The work and its form are inspired by our increasingly complex and self-reflective relationships to emerging technologies, as well as by moral philosophy and Socratic dialogue. What can we learn about ourselves by how we engage and interact with technology? What new questions will arise for us after walking the labyrinth? The work is a meditation on perennial, and now particularly pressing, aspects of being human.

For the Labyrinth’s installation at WeRobot, Sarah Newman has collaborated with Jessica Fjeld and Jessica Yurkofsky to create a miniature labyrinth, broken into pieces, which conference attendees will receive in the form of an invitation: an invitation to engage with the work and with each other, and to explore their own relationships to our complex moral world.