Administering Artificial Intelligence

Alicia Solow-Niederman

Michael Froomkin will lead a discussion of Alicia Solow-Niederman’s Administering Artificial Intelligence on Saturday, April 13, at 11:00 a.m. at #werobot 2019.

Calls for sector-specific regulation or the creation of a federal agency or commission to guide and constrain artificial intelligence, or AI, development are increasing. This turn to administrative law is understandable because AI’s regulatory challenges seem similar to those in other technocratic domains, such as the pharmaceutical industry or environmental law. But an “FDA for algorithms” or federal robotics commission is not a cross-cutting AI solution. AI is unique, even if it is not entirely different. AI’s distinctiveness comes from technical attributes (speed, complexity, and unpredictability) that strain traditional administrative law tactics, in combination with institutional settings and incentives, or strategic context, that affect its development path.

This Article puts American AI governance in strategic context. Today, there is an imbalance of state and non-state AI authority. Commercial actors dominate research and development, and private resources outstrip public investments. Even if we could redress this baseline, a fundamental yet under-recognized problem remains. Any governance strategy must contend with the ways in which algorithmic applications permit seemingly technical decisions to de facto regulate human behavior, with a greater potential for physical and social impact than ever before. When coding choices functionally operate as policy in this manner, the current trajectory of AI development augurs an era of private governance. Without rethinking our regulatory strategies, we risk losing the democratic accountability that is at the heart of public governance.

The Human/Weapon Relationship in the Age of Autonomous Weapons and the Attribution of Criminal Responsibility for War Crimes

Marta Bo

Madeleine Elish will lead a discussion of Marta Bo’s The Human/Weapon Relationship in the Age of Autonomous Weapons and the Attribution of Criminal Responsibility for War Crimes on Friday, April 12, at 1:45 p.m. at #werobot 2019.

This paper will inquire into how the advent of artificial intelligence in warfare impacts the human-weapon command-and-control relationship and how this relationship may alter the mental state of the soldiers and/or commanders charged with deploying lethal autonomous weapon systems (LAWS). In particular, this may include an inability to understand and assess the risk inherent in the deployment of LAWS due to their complexity and unpredictability, overreliance on information that may be subject to automation bias, or, alternatively, a lack of trust in LAWS that leads to relevant information being ignored.

Assessment of these new features against mens rea standards raises questions of whether these features alter the perpetrator’s awareness of risks, his/her failure to perceive risks, and his/her subjective attitude towards these risks. These elements will inform the assessment of the mental state of the accused, which may be determinative for the ascription of criminal responsibility.

Emerging Legal and Policy Trends in Recent Robot Science Fiction

Robin Murphy

Kevin Bankston will lead a discussion of Robin Murphy’s Emerging Legal and Policy Trends in Recent Robot Science Fiction on Saturday, April 13, at 8:30 a.m. at #werobot 2019.

Science fiction has a long history of predicting technological advances and reflecting societal concerns or expectations. This paper examines popular print science fiction from the past five years (2013-2018) in which robots were essential to the fictional narrative and the plot depended on a legal or policy issue related to robots. Five books and one novella series were identified: Head On (Scalzi), The Robots of Gotham (McAulty), Autonomous (Newitz), The Murderbot Diaries (Wells), A Closed and Common Orbit (Chambers), and Provenance (Leckie).

An analysis of these works of fiction shows four new concerns about robots that are emerging in the public consciousness.

One is that robots will enable false identities through telepresence and that people will be vulnerable to deception. A second concern is the conditions under which sentient robots would be granted the rights of citizenship, or at least protection. A third is that outlawing artificial intelligence for robots will not protect the citizenry from undesirable uses but will instead put a country at risk from other countries that continue to embrace AI. The fourth concern is that ineffectual or non-existent product liability laws will lead to sloppy software engineering that produces unintended dangerous robot behaviors and increases cyber vulnerabilities.

Robots in Space: Sharing Our World with Autonomous Delivery Vehicles

Mason Marks

Kristen Thomasen will lead a discussion of Mason Marks’s Robots in Space: Sharing Our World with Autonomous Delivery Vehicles on Friday, April 12, at 3:30 p.m. at #werobot 2019.

Industrial robots originated in mid-twentieth-century factories, where they increased the efficiency of manufacturing. Their implementation was an extension of earlier industrial automation such as the introduction of Henry Ford’s mechanized assembly line in 1913. In Ford’s assembly line, a rope-and-pulley system advanced each vehicle from one worker to the next, allowing each worker to remain stationary. Half a century later, in 1961, the first robotic arm, created by Unimate, was introduced to auto manufacturing, which further increased efficiency. More recently, following advancements in artificial intelligence and sensor technology, industrial robots have acquired greater autonomy and transformed the logistics and delivery industries. Like Ford’s assembly line and Unimate’s robotic arm, Amazon’s fulfillment center robots, originally designed by Kiva Robotics, reduced the daily steps workers must take. Instead of walking through aisles to stock warehouse shelves or retrieve products for distribution, workers remain stationary, and the robots bring the products to them. Today, with even greater autonomy than their predecessors, robots are migrating out of factories, warehouses, and fulfillment centers and into neighborhood streets, sidewalks, and skies. The technological advancements that allowed robots to automate private industrial spaces, such as machine learning and sophisticated sensors, now enable autonomous delivery vehicles (ADVs) to travel independently in the outside world and deliver packages, meals, groceries, and other retail purchases to people’s homes.

This article focuses on the evolution of ADVs used for “last-mile delivery,” the final step of the delivery process that ends at the customer’s door. It breaks ADVs down into four categories: unmanned aerial vehicles (UAVs or “drones”); self-driving cars; autonomous delivery pods; and sidewalk delivery robots, which are sometimes called personal delivery robots (PDRs). The article describes the risks and benefits of deploying ADVs for last-mile delivery and analyzes the laws and federal agencies that regulate them. Last-mile delivery is generally thought to be “the most expensive and time-consuming part of the shipping process” because it is the most personalized and unpredictable. Industry estimates suggest that last-mile delivery can account for up to 53 percent of total shipping costs. ADV manufacturers claim they can reduce delivery time, increase efficiency, cut costs, improve the consumer experience, decrease traffic congestion, reduce carbon emissions, assist seniors and people with disabilities who may have decreased mobility, and democratize access to logistics and delivery resources for small businesses, allowing them to compete with large corporations. Critics claim ADVs may negatively impact public health by encouraging inactivity, obstruct roads and sidewalks, impair the mobility of seniors and people with disabilities, and endanger public safety due to their potential to collide with people who are not agile enough to get out of the way. ADVs may also reduce the need for human delivery workers, cause noise pollution, violate people’s privacy, and represent the increasing privatization of public spaces such as sidewalks. Though all ADVs will be discussed, my focus is primarily on sidewalk delivery robots because they are the newest and fastest-growing segment of the ADV industry, and they face the fewest legal and regulatory hurdles.
Particular attention will be paid to the differences between the laws that regulate sidewalk delivery robots and the laws that govern other types of ADVs. The article concludes by drawing lessons from the regulation of UAVs and self-driving cars to propose legislation to regulate sidewalk delivery robots that will increase their safety and utility while limiting the privatization of public spaces.

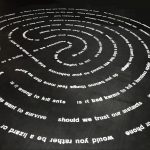

The Moral Labyrinth

We’ve put up a page about our special art installation, “The Moral Labyrinth” by Sarah Newman & Jessica Fjeld, which will be on display during We Robot 2019. Check it out.

Death of the AI Author

Carys Craig

Kate Darling will lead a discussion of Carys Craig and Ian Kerr’s Death of the AI Author on Saturday, April 13, at 4:00 p.m. at #werobot 2019.

Much of the second-generation literature on AI and authorship asks whether an increasing sophistication and independence of generative code should cause us to rethink embedded assumptions about the meaning of authorship, arguing that recognizing the authored nature of AI-generated works may require a less profound doctrinal leap than has historically been suggested. In this essay, we argue that the threshold for authorship does not depend on the evolution or state of the art in AI or robotics.

Instead, we contend that the very notion of AI-authorship rests on a category mistake: it is not an error about the current or potential capacities, capabilities, intelligence or sophistication of machines; rather it is an error about the ontology of authorship.

The ontological question, we suggest, requires an account of authorship that is relational; it necessitates a vision of authorship as a dialogic and communicative act that is inherently social, with the cultivation of selfhood and social relations as the entire point of the practice. Of course, this ontological inquiry into the plausibility of AI-authorship transcends copyright law and its particular doctrinal conundrums, going to the normative core of how law should—and should not—think about robots and AI, and their role in human relations.

AI, Professionals, and Professional Work: The Practice of Law with Automated Decision Support Technologies

Deirdre Mulligan

Madeleine Elish will lead a discussion of Deirdre Mulligan and Daniel Kluttz’s AI, Professionals, and Professional Work: The Practice of Law with Automated Decision Support Technologies on Friday, April 12, at 1:45 p.m. at #werobot 2019.

Technical systems employing algorithms are shaping and displacing human decision making in a variety of fields. As technology reconfigures work practices, researchers have documented potential loss of human agency and skill, confusion about responsibility, diminished accountability, and both over- and under-reliance on decision-support systems. The introduction of predictive algorithm systems into professional decision making compounds both general concerns about bureaucratic inscrutability and opaque technical systems and specific concerns about encroachments on expert knowledge and (mis-)alignment with professional logics, liability frameworks, and ethics. To date, however, we have little empirical data regarding how automated decision-support tools are being debated, deployed, used, and governed in professional practice.

The objective of our ongoing empirical study is to analyze the organizational structures, professional rules and norms, and technical system properties that shape professionals’ understanding and engagement with such systems in practice. As a case study, we examine decision-support systems marketed to legal professionals, focusing primarily on technologies marketed for e-discovery purposes. Generally referred to as “technology-assisted review” (TAR), and more specifically as “predictive coding,” these systems increasingly rely on machine-learning techniques to classify and predict which of the voluminous electronic documents subject to litigation should be withheld or produced to the opposing side. We are accomplishing our objective through in-depth, semi-structured interviews of experts in this space: the technology company representatives who develop and sell such systems to law firms and the legal professionals who decide whether and how to use them in practice.

We report research insights about how these systems and the companies offering them are shaping relationships between lawyers and clients, how lawyers are grappling with professional obligations in light of these shifting relationships, and the ways these systems construct and display knowledge. We argue that governance approaches should seek to put lawyers and decision-support systems in deeper conversation, not position lawyers as relatively passive recipients of system wisdom who must rely on out-of-system legal mechanisms to understand or challenge them. This requires attention to both the information demands of legal professionals and the processes of interaction that elicit human expertise and allow humans to obtain information about machine decision making.

Toward a Comprehensive View of the Influence of Artificial Intelligence on International Affairs

Jesse Woo

Heather Roff will lead a discussion of Jesse Woo’s Toward a Comprehensive View of the Influence of Artificial Intelligence on International Affairs on Friday, April 12, at 4:45 p.m. at #werobot 2019.

This essay has two objectives. The first is to widen the lens on the influences of artificial intelligence (AI) in international affairs beyond the issues that have dominated discussions of this topic so far. Using a framework developed in the discipline of Science and Technology in International Affairs, it seeks to uncover or highlight areas that have not received adequate attention. This includes but is not limited to non-military uses of AI for diplomacy and the importance of cross-border data regimes. It will also pull out broad themes and issues that may be helpful for policy makers to consider as they work with AI in the international arena.

The second objective is to counter the narrative that strong privacy rules will inevitably hurt the U.S. in a race with China to develop AI. Recent legislative actions in the U.S. and Europe (the CLOUD Act and GDPR, respectively) have shown how domestic action can have significant extraterritorial effects on data policy.

Rather than engage in a race to the bottom on privacy with China, States that value democracy, rule of law, and civil liberties should strive to create rules and norms that require those values in AI development and deployment. Doing so will hopefully play to the relative strengths of AI companies in those countries, and maybe even raise the bar for the industry worldwide.

Through the Handoff Lens: Are Autonomous Vehicles No-Win for Driver-Passengers

Jake Goldenfein

Madeleine Elish will lead a discussion of Jake Goldenfein, Deirdre Mulligan, and Helen Nissenbaum’s Through the Handoff Lens: Are Autonomous Vehicles No-Win for Driver-Passengers on Friday, April 12, at 1:45 p.m. at #werobot 2019.

There is a great deal of hype around the potential social benefits of autonomous vehicles. Rather than simply challenge this rhetoric as not reflecting reality, or as promoting certain political agendas, our paper exposes how the transport models described by tech companies, car manufacturers, and researchers each generate different political and ethical consequences for users.

To that end, it introduces the analytical lens of ‘handoff’ for understanding the ramifications of the different configurations of actors and components associated with different models of autonomous vehicle futures. ‘Handoff’ is an approach to tracking societal values in sociotechnical systems. It exposes what is at stake in transitions of control between different components and actors in a system, whether human, regulatory, mechanical, or computational.

The paper identifies three archetypes of autonomous vehicles – fully driverless cars, advanced driver assist systems, and connected cars.

The handoff analytic tracks the reconfigurations and reorientations of actors and components when a system transitions for the sake of ‘functional equivalence’ (for instance, through automation). The claim is that, in handing off certain functions to mechanical or computational systems, such as giving control of a vehicle to an automated system, the identity and operation of actor-components in a system change, and those changes have ethical and political consequences.

Within each archetype we describe how the components and actors of driving systems might be redistributed and transformed, how those transformations are embedded in the human-machine interfaces of the vehicle, and how that interface is largely determinative of the political and value propositions of autonomous vehicles for human ‘users’ – particularly with respect to privacy, autonomy, and responsibility.

Thinking through the handoff lens allows us to track those consequences and understand what is at stake for human values in the visions, claims and rhetoric around autonomous vehicles.

Artificial Intelligence Patent Infringement

Kate Darling will lead a discussion of Tabrez Ebrahim’s Artificial Intelligence Patent Infringement on Saturday, April 13, at 4:00 p.m. at #werobot 2019.

Tabrez Ebrahim

Artificial intelligence (A.I.) is spreading rapidly across industries as a means of developing or delivering goods and services. Businesses and inventors have followed by seeking patent protection for A.I. The rapid rise in A.I. patent filings has not been without debate over doctrinal patent law issues such as inventorship, nonobviousness, and patent eligibility of A.I. A natural next doctrinal inquiry is to determine what could be considered patent infringement of A.I. The imitation of A.I. technology raises the question: how should infringement of A.I. patents that are not invalidated be analyzed?

The technological distinction of “dynamic, trainable data sets” informs statutory interpretation of 35 U.S.C. § 271 for infringement of A.I. patents. An examination of § 271(a) direct, § 271(b) indirect (active inducement and contributory), and § 271(c) infringement of A.I. patents centers on A.I.’s autonomous ability to function without humans, to modify, and to evolve over time in response to new data. While the analysis of the patent infringement statute as applied to A.I. generally shows that patentees would have considerable difficulty in prevailing against would-be infringers, it suggests that A.I.’s distortion of the existing patent law framework necessitates redefining “inventors” and the notion of an infringer. The dynamic nature of A.I. also complicates the current scope of the term “divided infringement,” resulting in distributional consequences and new considerations for patent policy.